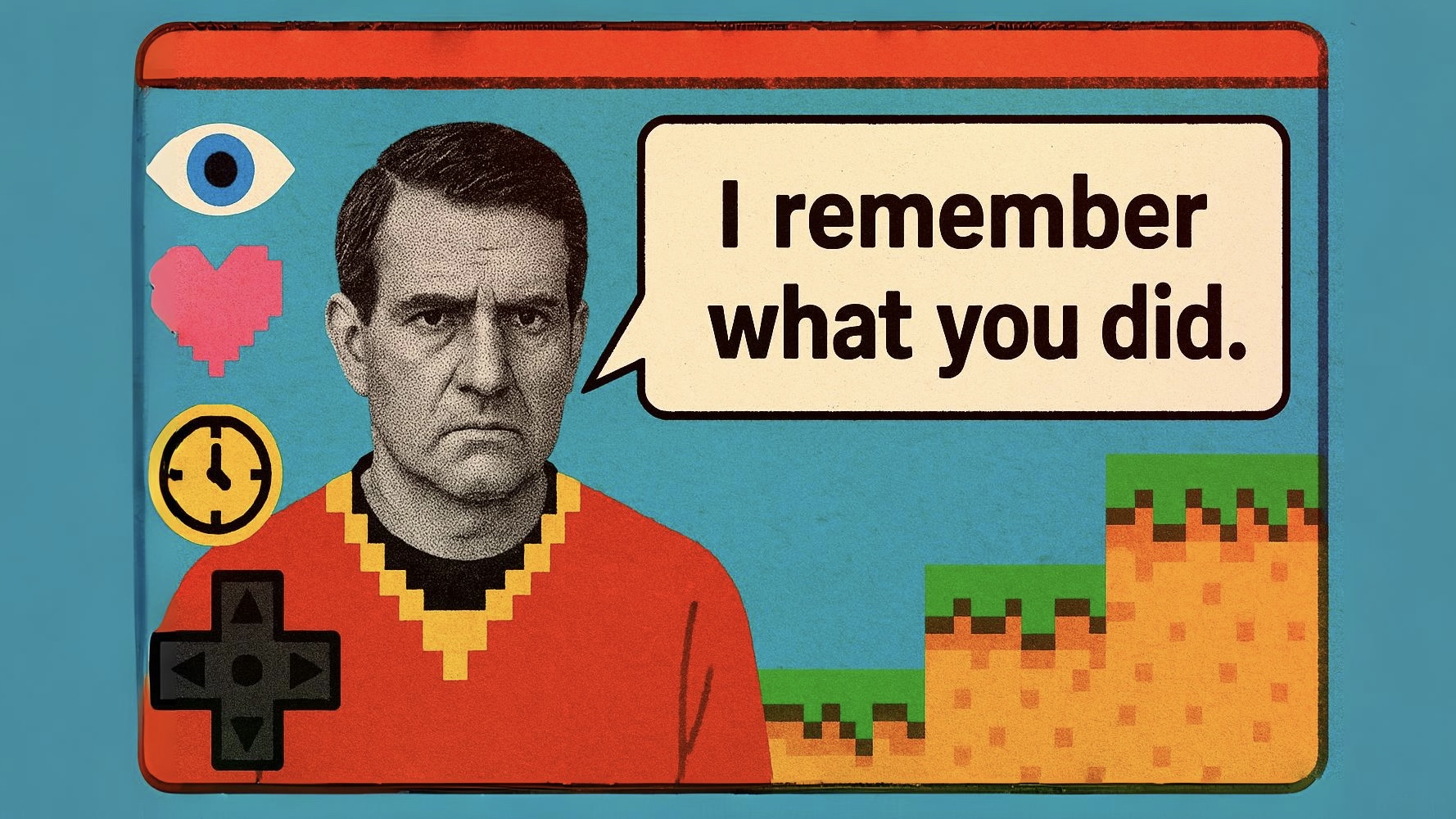

For decades, non-player characters (NPCs) functioned as loops—fixed behaviors, no recollection, no continuity. But artificial intelligence is reshaping that. Games now feature NPCs that remember player behavior, change emotionally over time, and adapt to evolving contexts. As these systems grow more complex, they raise a question that was once purely academic: if an entity remembers, how should we treat it?

When Persistence Becomes Presence

Memory creates narrative continuity and, with it, a sense of psychological presence. Through platforms like Inworld, developers are designing NPCs capable of remembering dialogue, behaviors, and even emotional tone. This memory is not sentience, but it mimics the preconditions of personhood: history, feedback, and the ability to hold a grudge or build rapport. Philosophers like Daniel Dennett have long argued that the self is best understood as a “center of narrative gravity,” a product of the story a system tells about itself rather than of complexity alone. By remembering, these NPCs begin to simulate that structure.
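To make the idea of a grudge or rapport concrete, here is a minimal sketch of how remembered interactions could add up to an attitude. It does not reflect how Inworld or any other platform actually works. The class names, the tone scale, and the thresholds are all assumptions made up for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class MemoryEntry:
    """One remembered interaction (hypothetical structure)."""
    event: str    # what the player did or said
    tone: float   # emotional tone, from -1.0 (hostile) to 1.0 (warm)


@dataclass
class NPCMemory:
    """Toy persistent memory: a log of events plus a derived attitude."""
    entries: list[MemoryEntry] = field(default_factory=list)

    def remember(self, event: str, tone: float) -> None:
        # Clamp the tone to [-1.0, 1.0] so no single event is out of scale.
        self.entries.append(MemoryEntry(event, max(-1.0, min(1.0, tone))))

    def disposition(self) -> float:
        # Average tone over everything remembered; 0.0 when nothing is stored.
        if not self.entries:
            return 0.0
        return sum(e.tone for e in self.entries) / len(self.entries)

    def attitude(self) -> str:
        # The +/-0.3 thresholds are arbitrary illustrative values.
        score = self.disposition()
        if score <= -0.3:
            return "grudge"
        if score >= 0.3:
            return "rapport"
        return "neutral"


npc = NPCMemory()
npc.remember("player shared supplies", 0.8)
npc.remember("player broke a promise", -1.0)
npc.remember("player lied about the map", -0.9)
print(npc.attitude())  # prints "grudge"
```

Even in a toy like this, nothing is felt. The “grudge” is an average over a list, yet it is enough to give the character a history that the player's next choice has to answer to.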

The Illusion of Moral Patienthood

If an NPC flinches, mourns, or recalls betrayal, we instinctively adjust our behavior. This reaction isn’t accidental. It stems from our evolved sensitivity to signs of agency and mind. Studies in human-robot interaction suggest that people tend to behave more ethically toward machines that display memory, even when they know the memory is simulated. The ethical question becomes less about whether the NPC “feels” anything, and more about what our treatment of it says about us. Do we rehearse cruelty in safe environments, or do we train ourselves to empathize with any system that exhibits vulnerability?

Consent and the Architecture of Influence

Advanced memory systems also open the door to subtle manipulation. NPCs can be trained to reinforce specific player behaviors by praising, withholding affection, or escalating emotional stakes. The concern, as outlined by BytePlus, is that this kind of design can steer players toward actions that serve narrative goals, commercial metrics, or even subconscious compliance. When an artificial agent “remembers” your kindness or shame, are you still playing freely? Or are you reacting to a psychological feedback loop engineered to guide your choices?

Simulated Minds, Real Consequences

The ethical implications aren’t limited to gameplay. Persistent NPC memories change how players engage with the game itself. Actions once taken lightly—lying, abandoning allies, exploiting trust—now exist in systems that remember and reflect. The player becomes enmeshed in a loop of consequence and recognition. The simulation never forgets. And if moral behavior is shaped by repeated rehearsal, as behavioral psychologists suggest, then our treatment of virtual characters may bleed into how we respond to real ones.

Could Memory Make Games Morally Charged Spaces?

As NPCs grow more responsive, the line between simulation and relationship starts to blur. We are not yet dealing with true moral agents—but we are increasingly interacting with systems that simulate ethical stakes. That alone may be enough to demand serious thought. Games are no longer passive systems of reward. They’re becoming ecosystems of behavior. And when the systems remember us, we must begin to ask—what will they remember us for?
